AI Intrusion Detection System

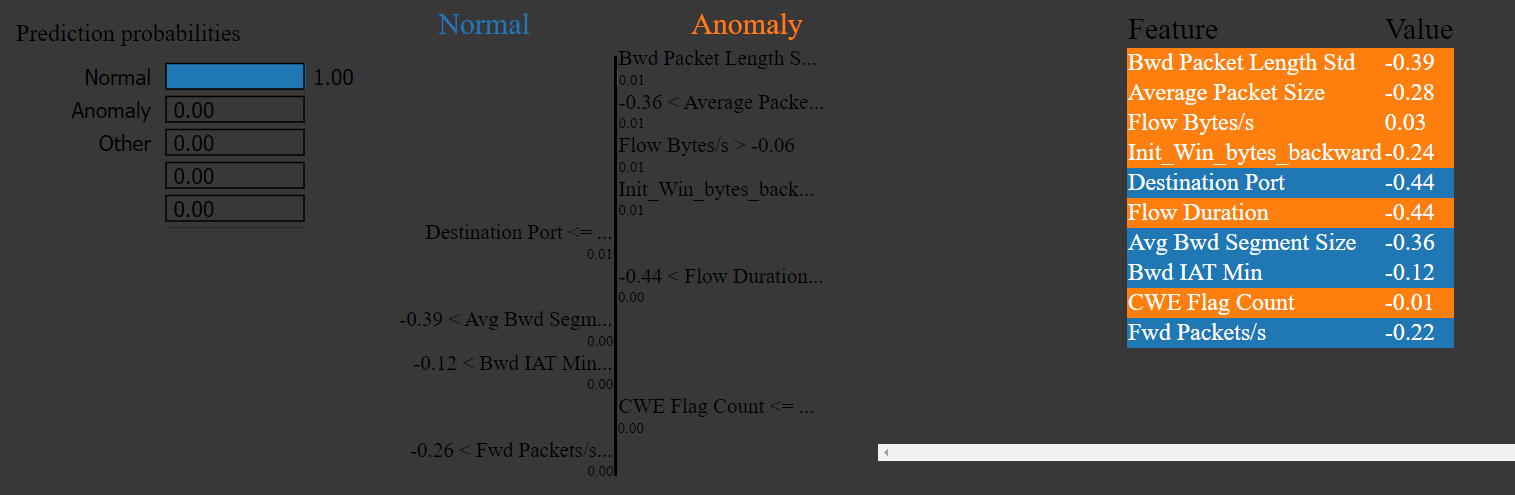

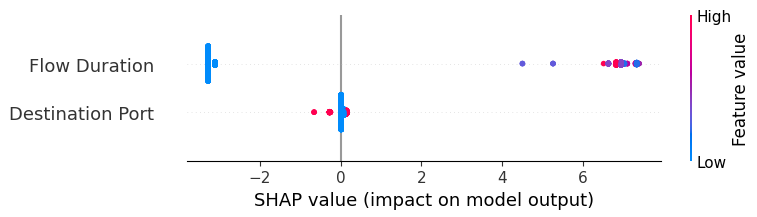

Hybrid anomaly detection with explainable AI using SHAP and LIME on the CIC-IDS2017 dataset.

PROJECT OVERVIEW

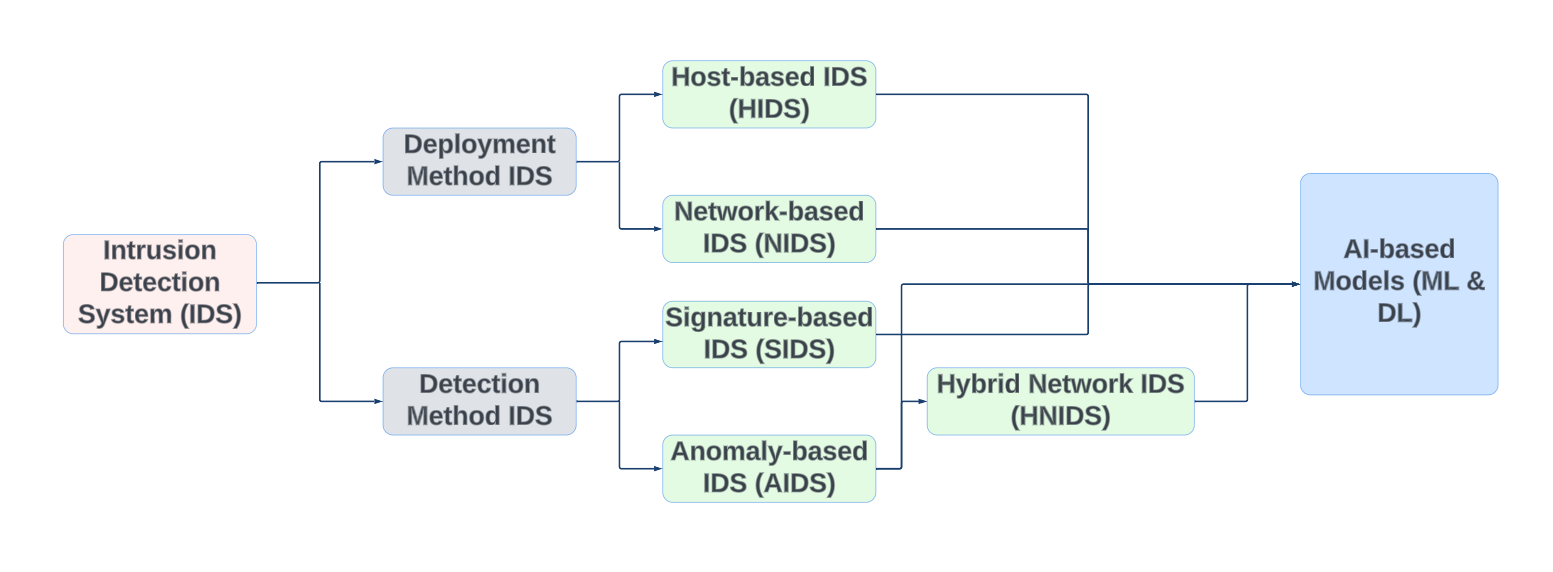

This system combines deep learning and traditional machine learning to detect network intrusions with high accuracy. The hybrid architecture integrates anomaly-based detection, which flags traffic that deviates from learned normal behavior, with signature-based detection of known attack patterns. Explainability methods interpret each model decision in real time.
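The source does not say how the three models are combined, so the following is only a minimal sketch of one possible fusion rule. It assumes the Autoencoder and One-Class SVM act as the anomaly detectors, and that the Random Forest, trained on labeled attacks, plays the role of the signature-style detector for known attacks. The class names, the `fuse` function, the equal weights, and the 0.5 decision cut-off are all hypothetical. The One-Class SVM convention (negative score means outlier) follows scikit-learn's `decision_function`.

```python
from dataclasses import dataclass


@dataclass
class ModelOutputs:
    recon_error: float     # Autoencoder reconstruction error for one flow
    ocsvm_score: float     # One-Class SVM decision_function value (negative = outlier)
    rf_attack_prob: float  # Random Forest probability that the flow is an attack


@dataclass
class Verdict:
    is_attack: bool
    confidence: float
    breakdown: dict  # per-model vote, usable for a "decision breakdown" view


def fuse(outputs: ModelOutputs, ae_threshold: float,
         weights=(1 / 3, 1 / 3, 1 / 3)) -> Verdict:
    """Hypothetical weighted vote over the three detectors."""
    votes = {
        # Anomaly detectors produce hard 0/1 votes.
        "autoencoder": 1.0 if outputs.recon_error > ae_threshold else 0.0,
        "one_class_svm": 1.0 if outputs.ocsvm_score < 0 else 0.0,
        # The supervised model contributes its attack probability directly.
        "random_forest": outputs.rf_attack_prob,
    }
    score = sum(w * v for w, v in zip(weights, votes.values()))
    is_attack = score >= 0.5
    # Confidence is the weighted agreement with whichever verdict was chosen.
    confidence = score if is_attack else 1.0 - score
    return Verdict(is_attack, round(confidence, 3), votes)
```

For example, `fuse(ModelOutputs(0.9, -0.2, 0.8), ae_threshold=0.5)` flags the flow as an attack with confidence 0.933. A weighted average is used here rather than a strict majority vote so that a very confident Random Forest can still influence borderline cases; `ae_threshold` would typically be derived from reconstruction errors on benign training traffic.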

FEATURES & IMPLEMENTATION

• Preprocessing pipeline with normalization, label encoding, and feature selection

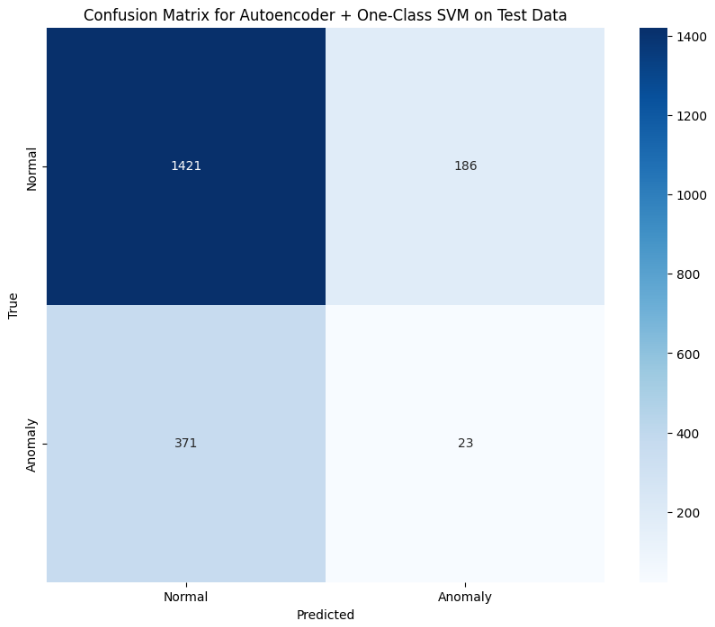

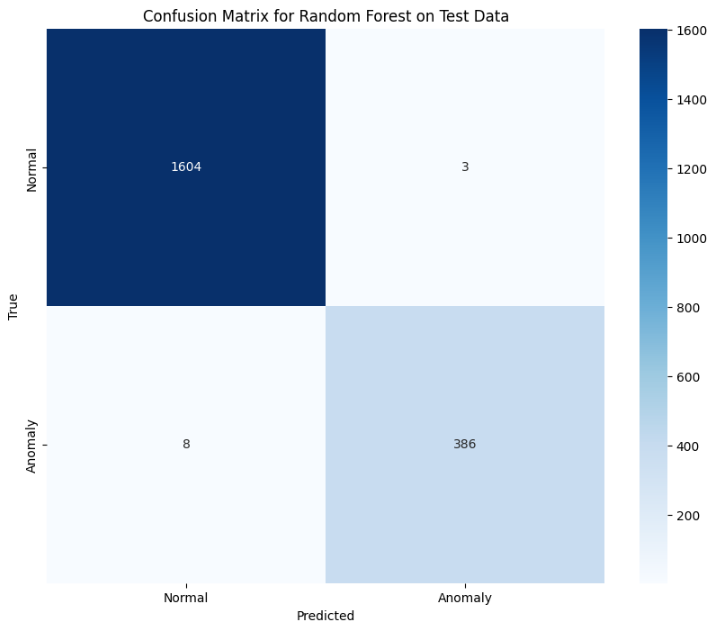

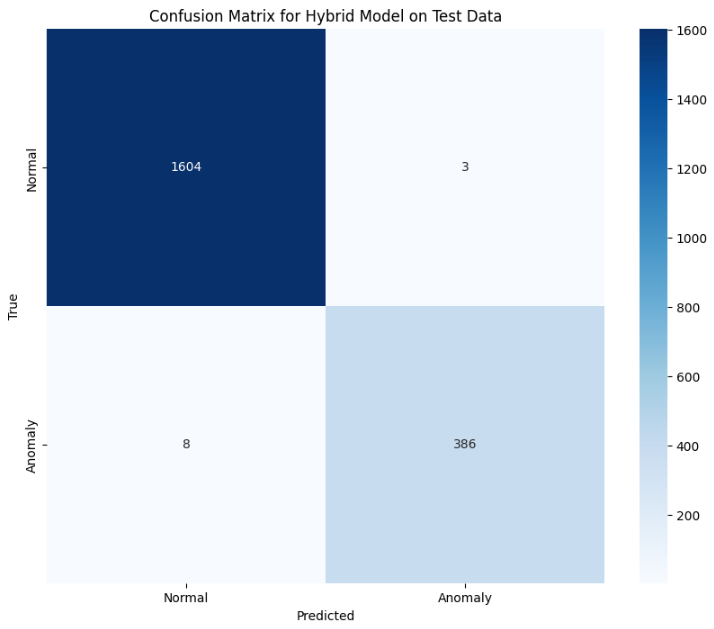

• Hybrid IDS using Autoencoder, One-Class SVM, and Random Forest

• Real-time prediction with confidence scores and a breakdown of each model's decision

• SHAP and LIME integration for explainable AI visualization
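The preprocessing step listed above can be sketched with scikit-learn as follows. This is a minimal sketch under stated assumptions, not the project's actual code: min-max scaling stands in for "normalization", `SelectKBest` with the ANOVA F-test (`f_classif`) stands in for "feature selection", non-finite values are replaced with column medians, and the function names `build_preprocessor` and `preprocess` are hypothetical.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import LabelEncoder, MinMaxScaler


def build_preprocessor(k_features: int) -> Pipeline:
    """Scale every feature to [0, 1], then keep the k highest-scoring ones."""
    return Pipeline([
        ("scale", MinMaxScaler()),
        ("select", SelectKBest(score_func=f_classif, k=k_features)),
    ])


def preprocess(X: np.ndarray, labels: list, k_features: int = 10):
    # Flow-based features can contain inf/NaN (e.g. rates over a zero duration);
    # treat both as missing and fill each column with its median.
    X = np.where(np.isfinite(X), X, np.nan)
    col_medians = np.nanmedian(X, axis=0)
    X = np.where(np.isnan(X), col_medians, X)

    # Map string class labels (e.g. "BENIGN", "DDoS") to integers 0..n-1.
    encoder = LabelEncoder()
    y = encoder.fit_transform(labels)

    pre = build_preprocessor(k_features)
    X_reduced = pre.fit_transform(X, y)
    return X_reduced, y, encoder, pre
```

In a real evaluation, the medians, the encoder, and the pipeline should be fit on the training split only and then applied to the test split (for the pipeline, with `pre.transform`), so that scaling statistics and feature scores do not leak information from the test data.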

TECHNOLOGIES USED

Python, pandas, NumPy, scikit-learn, TensorFlow, Keras, SHAP, LIME, Matplotlib, CIC-IDS2017 dataset

CHALLENGES

• Handling severe class imbalance, since benign traffic far outnumbers most attack classes

• Balancing sensitivity (catching attacks) against specificity (avoiding false alarms on benign traffic) across models

• Making model outputs understandable for non-technical users
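The sensitivity/specificity trade-off above can be made concrete by choosing a decision threshold on a detector's attack score. The sketch below assumes binary labels (1 = attack, 0 = benign) and a higher-is-more-suspicious score; the function names and the default 0.99 specificity target are hypothetical illustrations, not values taken from the project.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels where 1 = attack."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sens = tp / (tp + fn) if tp + fn else 0.0  # share of attacks detected
    spec = tn / (tn + fp) if tn + fp else 0.0  # share of benign flows left alone
    return sens, spec


def pick_threshold(y_true, scores, min_specificity=0.99):
    """Highest-sensitivity threshold whose specificity stays >= min_specificity.

    Returns (threshold, sensitivity, specificity), or None if no threshold
    drawn from the observed scores meets the specificity target.
    """
    best = None
    for thr in sorted(set(scores)):
        preds = [1 if s >= thr else 0 for s in scores]
        sens, spec = sensitivity_specificity(y_true, preds)
        if spec >= min_specificity and (best is None or sens > best[1]):
            best = (thr, sens, spec)
    return best
```

Fixing a specificity floor first reflects the usual IDS priority of keeping false alarms manageable. For the class-imbalance challenge itself, one common option in scikit-learn is `RandomForestClassifier(class_weight="balanced")`, which reweights classes inversely to their frequency.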

LEARNINGS & IMPACT

This project sharpened my skills in designing interpretable machine learning systems. I learned how to build trustworthy models that are transparent, scalable, and effective for security-critical applications.

SCREENSHOTS & DIAGRAMS